The core search architecture of SharePoint 2013 has a more complex and flexible topology that can be changed more efficiently by using Windows PowerShell. Each Search service application has its own search topology. If you create more than one Search service application in a farm, it’s recommended to allocate dedicated servers for the search topology of each Search service application.

In this post, we will configure the topology for one Search service application, with multiple search components spread across two servers for redundancy and performance.

#==============================================================

#Search Service Application Configuration Settings

#==============================================================

$SearchApplicationPoolName = "SearchApplicationPool"
$SearchApplicationPoolAccountName = "Contoso\Administrator"
$SearchServiceApplicationName = "Search Service Application"
$SearchServiceApplicationProxyName = "Search Service Application Proxy"
$DatabaseServer = "2013-SP"
$DatabaseName = "SP2013 Search"
# Both index folders are created on the local machine, because
# New-SPEnterpriseSearchIndexComponent checks RootDirectory where the script runs.
$IndexLocationServer1 = "D:\SearchIndexServer1"
mkdir -Path $IndexLocationServer1 -Force
$IndexLocationServer2 = "D:\SearchIndexServer2"
mkdir -Path $IndexLocationServer2 -Force

#==============================================================

#Search Application Pool

#==============================================================

Write-Host -ForegroundColor DarkGray "Checking if Search Application Pool exists"
$SPServiceApplicationPool = Get-SPServiceApplicationPool -Identity $SearchApplicationPoolName -ErrorAction SilentlyContinue
if (!$SPServiceApplicationPool)
{
    Write-Host -ForegroundColor Yellow "Creating Search Application Pool"
    $SPServiceApplicationPool = New-SPServiceApplicationPool -Name $SearchApplicationPoolName -Account $SearchApplicationPoolAccountName -Verbose
}

#==============================================================

#Search Service Application

#==============================================================

Write-Host -ForegroundColor DarkGray "Checking if SSA exists"
$SearchServiceApplication = Get-SPEnterpriseSearchServiceApplication -Identity $SearchServiceApplicationName -ErrorAction SilentlyContinue
if (!$SearchServiceApplication)
{
    Write-Host -ForegroundColor Yellow "Creating Search Service Application"
    $SearchServiceApplication = New-SPEnterpriseSearchServiceApplication -Name $SearchServiceApplicationName -ApplicationPool $SPServiceApplicationPool.Name -DatabaseServer $DatabaseServer -DatabaseName $DatabaseName
}

Write-Host -ForegroundColor DarkGray "Checking if SSA Proxy exists"
$SearchServiceApplicationProxy = Get-SPEnterpriseSearchServiceApplicationProxy -Identity $SearchServiceApplicationProxyName -ErrorAction SilentlyContinue
if (!$SearchServiceApplicationProxy)
{
    Write-Host -ForegroundColor Yellow "Creating SSA Proxy"
    New-SPEnterpriseSearchServiceApplicationProxy -Name $SearchServiceApplicationProxyName -SearchApplication $SearchServiceApplicationName
}

#==============================================================

#Start Search Service Instance on Server1

#==============================================================

$SearchServiceInstanceServer1 = Get-SPEnterpriseSearchServiceInstance -Local
Write-Host -ForegroundColor DarkGray "Checking if SSI is Online on Server1"
if ($SearchServiceInstanceServer1.Status -ne "Online")
{
    Write-Host -ForegroundColor Yellow "Starting Search Service Instance"
    Start-SPEnterpriseSearchServiceInstance -Identity $SearchServiceInstanceServer1
    While ($SearchServiceInstanceServer1.Status -ne "Online")
    {
        Start-Sleep -s 5
        # Re-read the instance so the loop sees the current Status
        $SearchServiceInstanceServer1 = Get-SPEnterpriseSearchServiceInstance -Local
    }
    Write-Host -ForegroundColor Yellow "SSI on Server1 is started"
}

#==============================================================

#Start Search Service Instance on Server2

#==============================================================

$SearchServiceInstanceServer2 = Get-SPEnterpriseSearchServiceInstance -Identity "2013-SP-AFCache"
Write-Host -ForegroundColor DarkGray "Checking if SSI is Online on Server2"
if ($SearchServiceInstanceServer2.Status -ne "Online")
{
    Write-Host -ForegroundColor Yellow "Starting Search Service Instance"
    Start-SPEnterpriseSearchServiceInstance -Identity $SearchServiceInstanceServer2
    While ($SearchServiceInstanceServer2.Status -ne "Online")
    {
        Start-Sleep -s 5
        # Re-read the instance so the loop sees the current Status
        $SearchServiceInstanceServer2 = Get-SPEnterpriseSearchServiceInstance -Identity "2013-SP-AFCache"
    }
    Write-Host -ForegroundColor Yellow "SSI on Server2 is started"
}

#==============================================================

#Cannot make changes to topology in Active State.
#Create new topology to add components

#==============================================================

$InitialSearchTopology = $SearchServiceApplication | Get-SPEnterpriseSearchTopology -Active

$NewSearchTopology = $SearchServiceApplication | New-SPEnterpriseSearchTopology

#==============================================================

#Search Service Application Components on Server1
#Creating all components except Index (created later)

#==============================================================

New-SPEnterpriseSearchAnalyticsProcessingComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer1
New-SPEnterpriseSearchContentProcessingComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer1
New-SPEnterpriseSearchQueryProcessingComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer1
New-SPEnterpriseSearchCrawlComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer1
New-SPEnterpriseSearchAdminComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer1

#==============================================================

#Search Service Application Components on Server2.
#Crawl, Query, and CPC

#==============================================================

New-SPEnterpriseSearchContentProcessingComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer2
New-SPEnterpriseSearchQueryProcessingComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer2
New-SPEnterpriseSearchCrawlComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer2

| Index partition | Server1 | Primary | Server2 | Primary |
|---|---|---|---|---|
| IndexPartition 0 | IndexComponent 1 | True | IndexComponent 2 | False |
| IndexPartition 1 | IndexComponent 3 | False | IndexComponent 4 | True |
| IndexPartition 2 | IndexComponent 5 | True | IndexComponent 6 | False |
| IndexPartition 3 | IndexComponent 7 | False | IndexComponent 8 | True |

#==============================================================

#Index Components with replicas

#==============================================================

New-SPEnterpriseSearchIndexComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer1 -IndexPartition 0 -RootDirectory $IndexLocationServer1
New-SPEnterpriseSearchIndexComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer2 -IndexPartition 0 -RootDirectory $IndexLocationServer2

New-SPEnterpriseSearchIndexComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer2 -IndexPartition 1 -RootDirectory $IndexLocationServer2
New-SPEnterpriseSearchIndexComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer1 -IndexPartition 1 -RootDirectory $IndexLocationServer1

New-SPEnterpriseSearchIndexComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer1 -IndexPartition 2 -RootDirectory $IndexLocationServer1
New-SPEnterpriseSearchIndexComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer2 -IndexPartition 2 -RootDirectory $IndexLocationServer2

New-SPEnterpriseSearchIndexComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer2 -IndexPartition 3 -RootDirectory $IndexLocationServer2
New-SPEnterpriseSearchIndexComponent -SearchTopology $NewSearchTopology -SearchServiceInstance $SearchServiceInstanceServer1 -IndexPartition 3 -RootDirectory $IndexLocationServer1


Note: Server1 is the local server where the script is run.

For fault tolerance, each index partition needs at least two index components (replicas). Here I create four index partitions with eight index components. One index partition can serve up to 10 million items. As a good practice, the primary and secondary replicas should be balanced across the index servers. So Server1 will host four index components (two of them primary replicas) and Server2 will host the other four (again, two primary replicas).
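The sizing rule above can be turned into a small planning helper. The sketch below is illustrative only: the function name `Get-IndexLayoutPlan` and its parameters are hypothetical, and it assumes the 10-million-items-per-partition figure quoted above and exactly two index servers. It alternates the intended primary between the two servers so that the primaries stay balanced, and it does not touch SharePoint at all.

```powershell
# Hypothetical planning helper; not a SharePoint cmdlet.
function Get-IndexLayoutPlan {
    param(
        [Parameter(Mandatory)] [long]$ItemCount,
        [long]$ItemsPerPartition = 10000000,          # figure quoted in the text above
        [string[]]$Servers = @('Server1', 'Server2')  # placeholder server labels
    )

    if ($Servers.Count -ne 2) { throw 'This sketch assumes exactly two index servers.' }

    # Round up: 10,000,001 items already need a second partition.
    $partitionCount = [int][Math]::Ceiling([double]$ItemCount / $ItemsPerPartition)
    if ($partitionCount -lt 1) { $partitionCount = 1 }

    for ($p = 0; $p -lt $partitionCount; $p++) {
        # Even partitions get their primary on the first server, odd ones on the second.
        [pscustomobject]@{
            IndexPartition = $p
            Primary        = $Servers[$p % 2]
            Secondary      = $Servers[($p + 1) % 2]
        }
    }
}

Get-IndexLayoutPlan -ItemCount 40000000 | Format-Table -AutoSize
```

For 40 million items this yields four partitions, with the intended primary alternating between Server1 and Server2, which is the layout shown in the table.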

* Please note that the cmdlets above will not necessarily create the primary replicas on the servers we intended, because we run all of them together without saving the topology in between. Ideally, create the index component for a partition on one server and then run Set-SPEnterpriseSearchTopology; this ensures that the primary replica is created on that server. Running the same cmdlet afterwards for another server and the same index partition then creates the secondary replica. For more details, see http://blogs.technet.com/b/speschka/archive/2012/12/02/adding-a-new-search-partition-and-replica-in-sharepoint-2013.aspx

When the index component cmdlets above are run one after another to create multiple index partitions and replicas on different servers, the replicas are created on the servers in no particular order, as you can see in the picture below. The primary and secondary replicas do not land on the servers we wanted. If the server location of the primary index component matters to you, set the topology before you run the cmdlet that creates the secondary replica on another server.
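One way to follow that advice is to activate the topology between adding the first and the second replica of a partition. The following is a rough sketch of that sequence for a single partition, not a tested procedure: the function name `Add-IndexPartitionStaged` and its parameters are hypothetical, and it assumes the Search service instances are already online and the root directories exist. It uses only the cmdlets already shown in this post, plus the `-Clone` option of New-SPEnterpriseSearchTopology.

```powershell
# Sketch only: the function name and parameters are hypothetical, not part of SharePoint.
function Add-IndexPartitionStaged {
    param(
        [Parameter(Mandatory)] $SearchServiceApplication,
        [Parameter(Mandatory)] $PrimaryInstance,
        [Parameter(Mandatory)] $SecondaryInstance,
        [Parameter(Mandatory)] [int]$IndexPartition,
        [Parameter(Mandatory)] [string]$PrimaryRootDirectory,
        [Parameter(Mandatory)] [string]$SecondaryRootDirectory
    )

    # First stage adds the replica that should become primary, second stage the other one.
    $stages = @(
        @{ Instance = $PrimaryInstance;   Root = $PrimaryRootDirectory },
        @{ Instance = $SecondaryInstance; Root = $SecondaryRootDirectory }
    )

    foreach ($stage in $stages) {
        # The active topology cannot be edited, so work on a clone of it.
        $active = Get-SPEnterpriseSearchTopology -SearchApplication $SearchServiceApplication -Active
        $clone  = New-SPEnterpriseSearchTopology -SearchApplication $SearchServiceApplication -Clone -SearchTopology $active

        New-SPEnterpriseSearchIndexComponent -SearchTopology $clone -SearchServiceInstance $stage.Instance -IndexPartition $IndexPartition -RootDirectory $stage.Root | Out-Null

        # Activate before the next replica is added, as the text above recommends.
        Set-SPEnterpriseSearchTopology -Identity $clone
    }
}
```

A call might look like `Add-IndexPartitionStaged -SearchServiceApplication $SearchServiceApplication -PrimaryInstance $SearchServiceInstanceServer2 -SecondaryInstance $SearchServiceInstanceServer1 -IndexPartition 1 -PrimaryRootDirectory $IndexLocationServer2 -SecondaryRootDirectory $IndexLocationServer1`. Each activation leaves the previous topology behind in an inactive state, so some cleanup with Remove-SPEnterpriseSearchTopology would still be needed afterwards.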

All search components are now created in our new topology. Before the new topology takes effect, it has to be activated. Remember that the old topology is still in the active state.

We will use the Set-SPEnterpriseSearchTopology cmdlet, which performs several important tasks: it activates the new topology [$NewSearchTopology.Activate()], deactivates all other active topologies, and sets the new (now active) topology as the current Enterprise Search topology.

#==============================================================

#Setting Search Topology using Set-SPEnterpriseSearchTopology

#==============================================================

Set-SPEnterpriseSearchTopology -Identity $NewSearchTopology

After running the Set-SPEnterpriseSearchTopology cmdlet, it will look like this:

As Set-SPEnterpriseSearchTopology has already done most of the job for us, only one thing remains: deleting the old topology, as it's no longer required.

#==============================================================

#Clean-Up Operation

#==============================================================

Write-Host -ForegroundColor DarkGray "Deleting old topology"

Remove-SPEnterpriseSearchTopology -Identity $InitialSearchTopology -Confirm:$false

Write-Host -ForegroundColor Yellow "Old topology deleted"

When the $SearchServiceApplication | Get-SPEnterpriseSearchTopology command is run, you will find just one topology (the new topology that we created).
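That check can also be scripted. The sketch below is a minimal illustration: the helper name `Test-SingleActiveTopology` is hypothetical, and it assumes the topology objects returned by Get-SPEnterpriseSearchTopology expose `TopologyId`, `State` and `ComponentCount`, which are the columns the cmdlet normally displays.

```powershell
# Hypothetical helper: returns $true when exactly one topology exists and it is active.
function Test-SingleActiveTopology {
    param([Parameter(Mandatory)] $SearchServiceApplication)

    # @() keeps the result an array even when only one topology is returned.
    $topologies = @(Get-SPEnterpriseSearchTopology -SearchApplication $SearchServiceApplication)

    # Show what is there; Out-Host keeps the table out of the function's return value.
    $topologies | Select-Object TopologyId, State, ComponentCount | Format-Table -AutoSize | Out-Host

    # State is compared as text so the check does not depend on the enum type being loaded.
    return ($topologies.Count -eq 1 -and "$($topologies[0].State)" -eq 'Active')
}
```

After the clean-up step, `Test-SingleActiveTopology -SearchServiceApplication $SearchServiceApplication` should return `$true`; a `$false` result suggests an old or half-built topology is still around.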

#==============================================================

#Check Search Topology

#==============================================================

Get-SPEnterpriseSearchStatus -SearchApplication $SearchServiceApplication -Text

Write-Host -ForegroundColor Yellow "Search Service Application and Topology is configured!!"

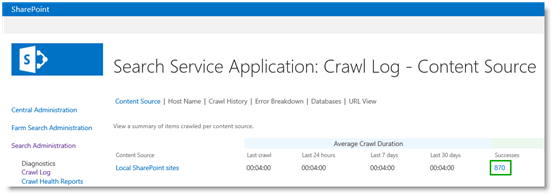

In Central Administration, on the Search service application page, you will find a topology like this:

To work out how many of each search component your environment needs, use these scaling guidelines.

* New-SPEnterpriseSearchIndexComponent requires a folder for storing the index files. In a multi-server search configuration, the cmdlet checks for the RootDirectory on the wrong server: it verifies that the folder exists only on the machine where the PowerShell script is executed, even when the new index component is destined for another machine. If the folder is missing there, you will get the error message: New-SPEnterpriseSearchIndexComponent : Cannot bind parameter 'RootDirectory'

There are two workarounds for this:

1. Create the folder manually on the machine that runs the PowerShell script, as is done in the script above.

2. Use the SharePoint object model directly instead of the cmdlets.

$Server02 = (Get-SPServer "2013-SPHost2").Name

$EnterpriseSearchserviceApplication = Get-SPEnterpriseSearchserviceApplication

$ActiveTopology = $EnterpriseSearchserviceApplication.ActiveTopology.Clone()

$IndexComponent = (New-Object Microsoft.Office.Server.Search.Administration.Topology.IndexComponent $Server02,1);

$IndexComponent.RootDirectory = "D:\IndexComponent02"

$ActiveTopology.AddComponent($IndexComponent)

* A note on removing an index component: if you have more than one active index replica for an index partition, you can remove an index replica by following the procedure "Remove a search component" in the article "Manage search components in SharePoint Server 2013". You cannot remove the last index replica of an index partition using this procedure. If you have to remove all index replicas from the search topology, you must remove and re-create the Search service application and create a completely new search topology that has the reduced number of index partitions.
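As a rough illustration of that procedure, the sketch below clones the active topology, removes one index replica from the clone and activates the result. The function name `Remove-IndexReplicaFromTopology` is hypothetical. The sketch also assumes that index components are named `IndexComponent<N>` and expose `IndexPartitionOrdinal` and `ComponentId`, so treat it as a starting point and check it against the referenced article before using it.

```powershell
# Sketch only: hypothetical helper built on the standard search topology cmdlets.
function Remove-IndexReplicaFromTopology {
    param(
        [Parameter(Mandatory)] $SearchServiceApplication,
        [Parameter(Mandatory)] [string]$ComponentName   # for example "IndexComponent2"
    )

    # The active topology cannot be changed directly, so edit a clone.
    $active = Get-SPEnterpriseSearchTopology -SearchApplication $SearchServiceApplication -Active
    $clone  = New-SPEnterpriseSearchTopology -SearchApplication $SearchServiceApplication -Clone -SearchTopology $active

    $components = @(Get-SPEnterpriseSearchComponent -SearchTopology $clone)
    $target = $components | Where-Object { $_.Name -eq $ComponentName } | Select-Object -First 1
    if (-not $target) { throw "Component '$ComponentName' was not found in the topology." }

    # Guard: never remove the last replica of an index partition.
    $replicas = @($components | Where-Object {
        $_.Name -like 'IndexComponent*' -and $_.IndexPartitionOrdinal -eq $target.IndexPartitionOrdinal
    })
    if ($replicas.Count -lt 2) { throw "'$ComponentName' is the last replica of its partition." }

    Remove-SPEnterpriseSearchComponent -Identity $target.ComponentId -SearchTopology $clone -Confirm:$false
    Set-SPEnterpriseSearchTopology -Identity $clone
}
```

The guard mirrors the restriction described above: with only one replica left in a partition the function stops before any change is activated, although the unused clone it created would still need to be removed.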